An Orwellian future is becoming more of a reality each day, and more so in the United States than anywhere else: a country supposedly meant to protect the Free World, but in practice built to protect corporations more than its people. Of the nine Big Tech companies shaping the future of artificial intelligence, six are based in the U.S., and as it stands, there are no regulations limiting how much of our every move these corporations may record and then sell to government agencies for surveillance.

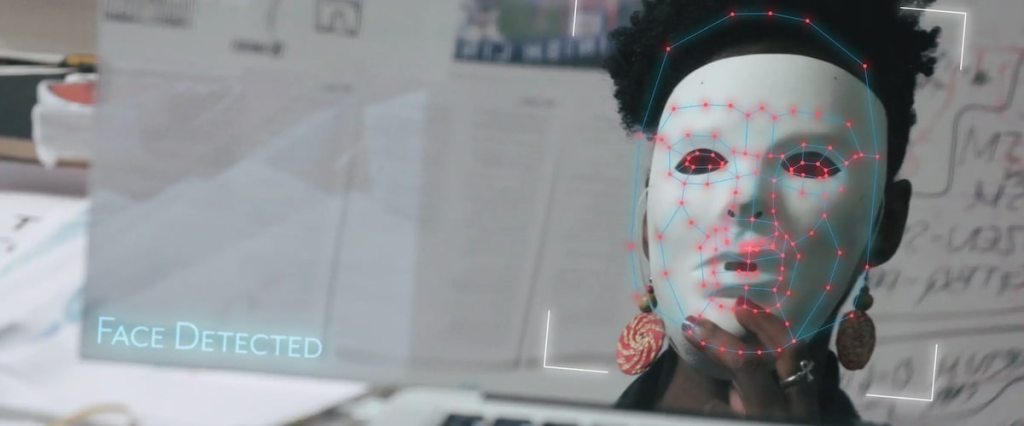

Throughout *Coded Bias*, a documentary that explores the racial biases in everyday algorithms, biometric surveillance, and other data science technologies, I shuddered at the moments when people walked down the street while their faces were scanned and assigned whatever score or description the technology gave their appearance. We tell ourselves that we live in a democracy, that governments in the U.S. and the rest of the Western world would never use technology to oppress people, that we are not China and should be proud not to be. But as the film shows, we are no different; the only real difference is that China’s government is explicit about how it uses technology on its people. Unlike the European Union or the United Kingdom, the U.S. gives Big Tech companies unchecked power on the premise that technology can be “neutral” or “unbiased,” even though these beliefs have been proven false time and time again.

Algorithms, as Ruha Benjamin and the people interviewed in this documentary point out, are not neutral toward historical biases, because they do not simply materialize out of the ether. Rather, algorithms have historically been built by white men, a demographic at the top of the social hierarchy and accustomed to wielding the power that comes with that position. Even if these men are not consciously or intentionally making their algorithms sexist and racist, their biases still filter in. For example, white men using these technologies receive more favorable scores for mortgages and healthcare, and are rated as less likely to commit crimes, than people of color using the same technologies. If these scores can shape how our lives turn out, for better or worse, then the systems producing them should provide an equitable analysis of the people they surveil rather than reinforcing the historical biases packed into them. But so far, they do not.

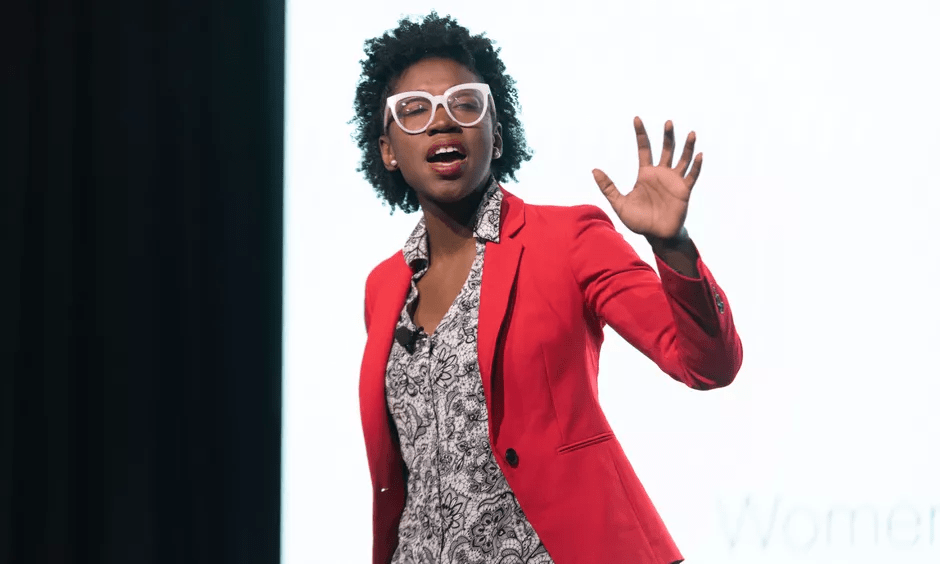

My favorite part of the documentary was when Joy Buolamwini testified before Congress, because you could feel the hard work and perseverance it took for her and her team to make it to the Capitol. The joy written on the faces of the tenants from Brooklyn, the women from Big Brother Watch, and Buolamwini’s friends was infectious, and I won’t lie: I teared up a little watching that scene. You could feel the potential for change in that moment, the hope that someday our country’s leaders might step up and actually do the jobs they were elected to do, which is to protect the American people.